M6 Competition And Worse-Than-Random Participants

The year-long M6 competition has ended. In this article, I include my opinions about the competition, and investment competitions in general, and an analysis of the results.

About Investment Competitions

In my opinion, investment or trading competitions with no money at stake are akin to paper trading and the results may not be representative of an investment/trading environment with real money at stake. Price action has an impact on the psychology of the market participants, both discretionary and systematic. Therefore, at a fundamental level, no significant conclusions can be derived from paper trading and more importantly, differentiating between skill and luck is much more difficult than in the case where money is at stake and participants have skin in the game.

Furthermore, in my opinion, paper trading competitions cannot provide any insight into the validity of the Efficient Market Hypothesis (EMH), as the organizers suggested. Even competitions with real money that run for relatively short periods cannot offer any valuable information. On the M6 competition webpage, a reference was made to “beating” the market. The EMH is not about the inability to “beat” the market in a competition lasting one year but about the inability to do this consistently. The results from a year of competition may include many outliers in the right tail of the distribution. However, EMH precludes the consistency of any particular outlier, and for sufficiently large samples, no participant can beat the market. Since sufficient samples in finance are large, some participants in a one-year competition may have managed to take advantage of temporary anomalies, or market regimes, to make significant returns, or may have been extremely lucky, as the fictional character and fund manager, Felix Grandluckmeister, has admitted.

Therefore, at a fundamental level, paper-trading competitions, even those that last for a year, cannot provide evidence for or against EMH, or even about the models or discretionary methods used by the winners, because of the lack of skin-in-the-game. These points, of course, may be debatable, but the burden of proof is on those who claim they can conceive a paper-trading competition that can answer these fundamental questions. A more interesting subject is what the results in this particular case reveal about the participants and their performance.

Performance Criteria

The organizers of the competition used the arithmetic mean of the ranks of the Ranked Probability Score (RPS) and a variant of the Information Ratio (IR). While the IR variant, which is a variant of the Sharpe ratio with the risk-free rate set to zero, is a performance criterion used in investing, RPS can be a problematic measure because the true probability distributions are not known. Knowledge about the outcomes reveals nothing about their probabilities, other than the fact that they are not zero. Therefore, the score may penalize better predictions and reward random ones. In my opinion, RPS is a problematic, and even esoteric, score, and in the analysis below I will use only the Sharpe variant IR.
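As a rough illustration of a Sharpe-style ratio with the risk-free rate set to zero, the sketch below divides the mean of daily returns by their sample standard deviation and annualizes the result. The function name `information_ratio`, the 252-day annualization, and the use of simple daily returns are assumptions made for this example; the exact IR formula used by the organizers may differ.

```python
import math
import statistics


def information_ratio(daily_returns, periods_per_year=252):
    """Sharpe-style ratio with the risk-free rate set to zero.

    Assumption: mean of daily returns over their sample standard
    deviation, scaled by sqrt(periods_per_year). This is one common
    convention, not necessarily the competition's definition.
    """
    if len(daily_returns) < 2:
        raise ValueError("need at least two returns")
    mean = statistics.fmean(daily_returns)
    stdev = statistics.stdev(daily_returns)  # sample standard deviation
    if stdev == 0:
        raise ValueError("returns have zero volatility")
    return (mean / stdev) * math.sqrt(periods_per_year)


if __name__ == "__main__":
    # Made-up returns, for illustration only.
    print(information_ratio([0.01, -0.01, 0.02, 0.0]))
```

Because the risk-free rate is zero, the numerator is just the average return, so the ratio rewards return per unit of volatility and nothing else.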

Analysis Of The Results

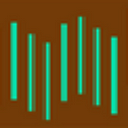

Based on the Leaderboard, below is the histogram of the IR for the 163 participant groups.

There are some extreme IR outliers, with the distribution having high excess kurtosis (2.24) and standard deviation (9.433), while the mean is negative at -3.06. Only 41.7% of the participants had a positive IR, the minimum was -29.18, and the maximum was 32.89.

I did not expect this leptokurtic distribution with a positive skew (0.234) and high standard deviation. I expected something closer to normal. My opinion is that this distribution reveals a mix of participants: a small fraction had an investment background, and a large fraction had no experience or decided to take high risks because they had nothing to lose and only something to gain. The optimal strategy under conditions of no real loss is to take the maximum risk. With high risks comes a high probability of large losses, and the result was a distribution with a very high standard deviation for the IR.
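The summary statistics quoted for the IR distribution (mean, standard deviation, skew, excess kurtosis, share of positive values) can be computed along the following lines. This is a minimal sketch that assumes population (biased) moment estimators; the figures in the text may have been produced with sample-adjusted estimators, and the helper name `describe` and the input values are made up for illustration, not taken from the leaderboard.

```python
import statistics


def describe(values):
    """Return basic moments of a list of numbers.

    Assumption: population (biased) estimators. Excess kurtosis is the
    fourth standardized moment minus 3, so a normal distribution scores 0.
    """
    n = len(values)
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return {
        "mean": mean,
        "std": std,
        "skew": m3 / std ** 3,
        "excess_kurtosis": m4 / std ** 4 - 3.0,
        "share_positive": sum(v > 0 for v in values) / n,
    }


if __name__ == "__main__":
    # Hypothetical IR values, not the actual competition results.
    print(describe([-12.0, -4.5, -1.0, 0.5, 2.0, 3.5, 20.0]))
```

A positive excess kurtosis indicates fatter tails than a normal distribution, which is what "leptokurtic" refers to here.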

Random Decisions Simulation

The model I used for the random decision simulations is based on the trivial 1/N allocation and a fair coin toss to decide whether to go long or short at the end of every month for the duration of the contest. Specifically, I used the same universe of 100 stocks and ETFs, and at the close of each month, a fair coin was tossed. If the outcome was heads, a random participant went long the security for 0.01 allocation, and if the outcome was tails, the random participant went short the security for 0.01 allocation. Below is the histogram of the IR for 1000 simulations.
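The random-decision model described above can be sketched as follows. This is not the code used for the article: the market data here is synthetic zero-mean Gaussian noise standing in for the actual universe of 100 stocks and ETFs, one coin is assumed to be tossed per security at each monthly rebalance, a month is assumed to have 21 trading days, and the IR is assumed to be the annualized mean over standard deviation of daily portfolio returns. All names (`synthetic_returns`, `random_participant_ir`, `simulate`) are hypothetical.

```python
import math
import random
import statistics

N_ASSETS = 100        # size of the universe
N_MONTHS = 12         # duration of the contest
DAYS_PER_MONTH = 21   # assumption: trading days per month


def synthetic_returns(rng, daily_vol=0.015):
    """Placeholder data: returns[month][day][asset], zero-mean Gaussian."""
    return [[[rng.gauss(0.0, daily_vol) for _ in range(N_ASSETS)]
             for _ in range(DAYS_PER_MONTH)]
            for _ in range(N_MONTHS)]


def random_participant_ir(returns, rng):
    """IR of one participant who tosses a fair coin per security each month."""
    weight = 1.0 / N_ASSETS  # the 1/N allocation, 0.01 per security
    portfolio = []
    for month in returns:
        # Heads: long (+1); tails: short (-1). Held until the next rebalance.
        signs = [1 if rng.random() < 0.5 else -1 for _ in range(N_ASSETS)]
        for day in month:
            portfolio.append(sum(weight * s * r for s, r in zip(signs, day)))
    mean = statistics.fmean(portfolio)
    stdev = statistics.stdev(portfolio)
    return mean / stdev * math.sqrt(252)


def simulate(n_participants=1000, seed=0):
    """Run all random participants against the same (synthetic) market."""
    rng = random.Random(seed)
    returns = synthetic_returns(rng)
    return [random_participant_ir(returns, rng) for _ in range(n_participants)]


if __name__ == "__main__":
    irs = simulate(n_participants=200)
    print(statistics.fmean(irs), statistics.pstdev(irs), min(irs), max(irs))
```

Every random participant faces the same market path, so the spread of the resulting IR values comes only from the coin tosses. With real prices, correlations between securities and fat tails would change the shape of the distribution.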

The distribution is “more” normal, as was expected, with a kurtosis of 0.886 and a skew of -0.219. The mean is 0.01, which is close to 0 as also expected, and the standard deviation is 1.394. The minimum is -5.27 and the maximum is 5.59.

The vertical dotted line is at IR=2.21, which is the 5% threshold, i.e., 95% of random participants had an IR of less than 2.21.

Note that 47% of M6 participants scored worse than the bottom 5% of the random model participants. In addition, about 36% of the M6 participants had a lower IR than the minimum of the random model participants. These are quite disturbing results because they mean that there were many worse-than-random participants in the competition.
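Comparisons of this kind, between the participants' IR values and the simulated random benchmark, can be sketched as below. The helper names `percentile` and `compare_to_random` are hypothetical, the percentile uses simple linear interpolation (other conventions give slightly different thresholds), and the numbers in the usage example are made up rather than taken from the leaderboard or the simulation.

```python
import math


def percentile(sorted_values, q):
    """Linear-interpolation percentile of pre-sorted values, q in [0, 1]."""
    pos = q * (len(sorted_values) - 1)
    lo = math.floor(pos)
    hi = math.ceil(pos)
    frac = pos - lo
    return sorted_values[lo] * (1 - frac) + sorted_values[hi] * frac


def compare_to_random(participant_irs, random_irs, q=0.95):
    """Share of participants beyond the tails of the random benchmark."""
    ordered = sorted(random_irs)
    upper = percentile(ordered, q)        # e.g. the top-5% threshold
    lower = percentile(ordered, 1.0 - q)  # e.g. the bottom-5% threshold
    n = len(participant_irs)
    return {
        "upper_threshold": upper,
        "lower_threshold": lower,
        "share_above_upper": sum(x > upper for x in participant_irs) / n,
        "share_below_lower": sum(x < lower for x in participant_irs) / n,
        "share_above_random_max": sum(x > ordered[-1] for x in participant_irs) / n,
        "share_below_random_min": sum(x < ordered[0] for x in participant_irs) / n,
    }


if __name__ == "__main__":
    # Hypothetical inputs for illustration only.
    benchmark = [float(v) for v in range(-10, 11)]
    participants = [-20.0, -9.5, 0.0, 9.5, 20.0]
    print(compare_to_random(participants, benchmark))
```

Reporting both tails matters for the argument: the upper tail identifies candidates for skill or luck, while the lower tail identifies the worse-than-random participants.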

Also note that 18.4% of the M6 competition participants had an IR greater than 2.21, that is, they scored higher than 95% of the random model participants. Furthermore, 18 M6 participants, or 11%, had a higher IR than the maximum IR of the random model at 5.59. Here are some possibilities regarding those outliers:

- They have significant investment experience and/or a robust system.

- The decisions/systems were lucky due to a favorable regime.

- They took excessive risks and were rewarded because their decisions happened to be lucky.

- They tossed biased coins and had a lucky streak.

There is no way of knowing whether the outlier performance is due to skill or luck other than repeating the competition with real money at stake for a few years.

Conclusion

Investment competitions without skin-in-the-game (real money at risk) cannot reveal much about investing in general but only about the participants’ performance. The M6 competition was no exception to this, in my opinion. A randomization study based on a trivial model showed that a large fraction of the participants in M6 were worse-than-random. This may be the result of taking excessive risks, since they had nothing to lose, or a result of other unknown factors. There were also many positive outliers, but it is impossible to know whether that was due to skill or luck.

You can follow me on Twitter: @mikeharriny